Table of Contents

- Overview

- Software Architecture and Design

- The “Window of Opportunity” Problem

- Websocket Integration to Interact with Showdown

- Active Monitoring Scripts to Handle Twitch Burst Data

- Control Logic and Move Processing

- Custom Client and UI

- 24/7 Twitch Stream with Google Cloud Infrastructure

- Cost Analysis + Profit Margin Breakdown

- The “Chicken and the Egg” Problem

- Biggest Mistakes

- Future Work

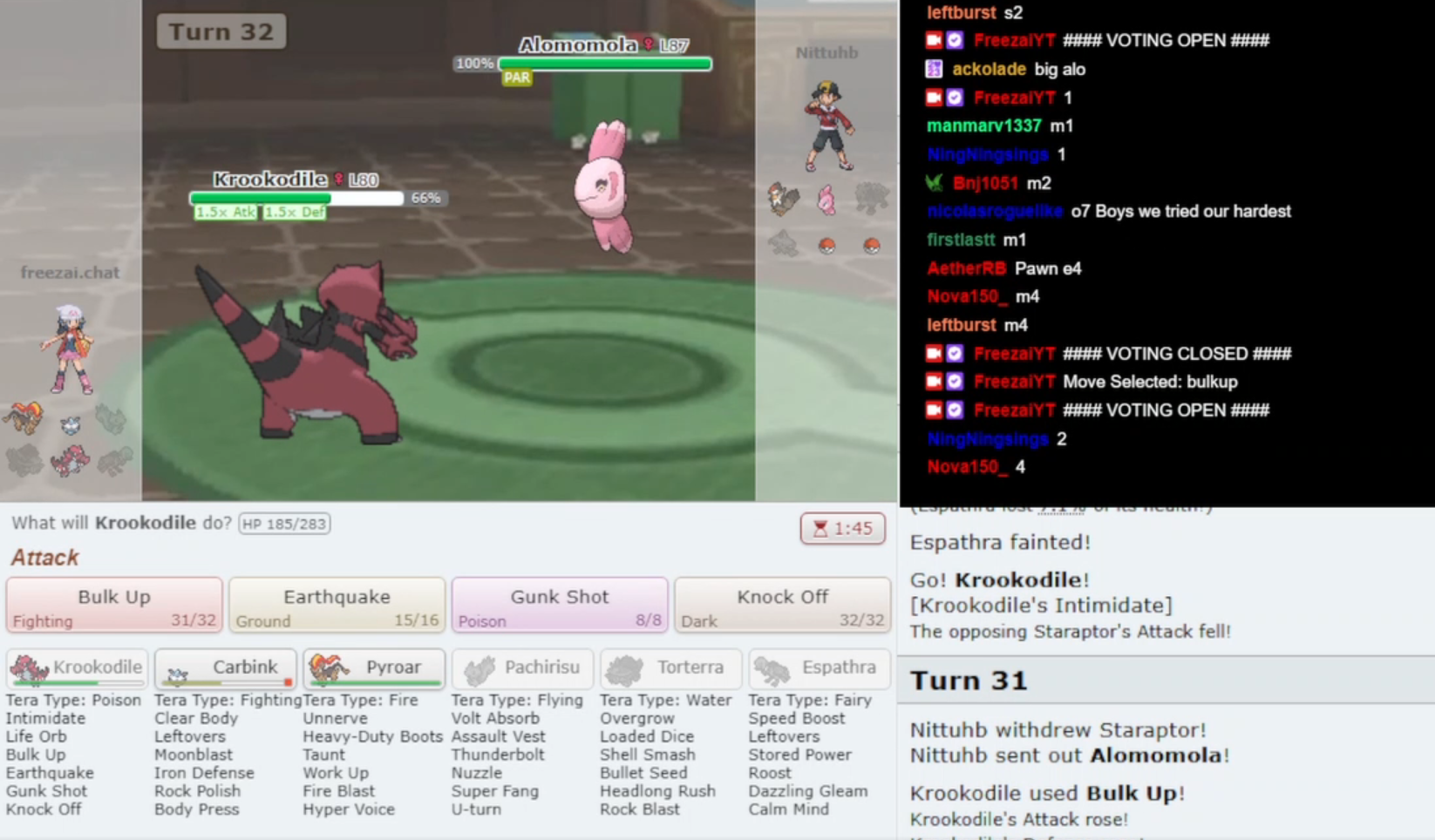

Overview

A website remotely controlled by Twitch chatters.

“Twitch Plays” is a genre of crowdsourcing where many individuals (called chatters) input moves to a live-streaming platform (Twitch) and control a game together. Made famous by the viral “Twitch Plays Pokemon” event in 2014, “Twitch Plays” is a fascinating social experiment as thousands of people control one game and try to beat it together.

“Twitch Plays Showdown” is my rendition of this genre, focusing on Pokemon Showdown, a browser-based, competitive, turn-based game where chatters control Pokemon battles 24/7 against human opponents. In a Pokemon battle, a player can either make an “attack” or a “switch”, with modifiers such as a “tera attack.” Chatters type their moves into the Twitch chat, and a decision is made in the actual game based on those inputs. In this way, chatters queue up games on the global ladder and try to win as many as possible to improve their ladder rank.

Some of the primary technical challenges include:

- Interacting with the browser game using SSL Websockets

- Reading and parsing the Twitch chat

- Google Cloud infrastructure management

I expand on the problems, solutions, and difficulties faced below. This article mainly focuses on technical implementation details, but there are also sections on the economics and other non-technical factors.

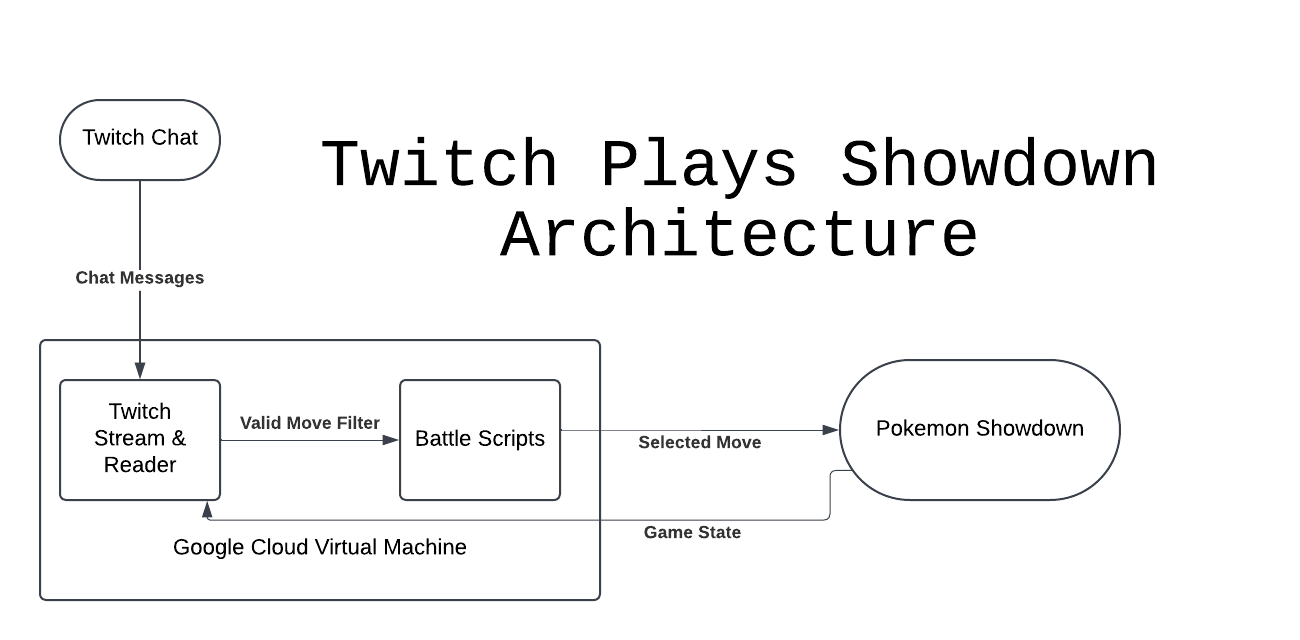

Software Architecture and Design

The fundamental design is about being able to read chat messages, parse them, and then send a decision to Pokemon Showdown based on these messages. The two most important qualities of this program are latency and security.

- Latency. The architecture has to be designed to minimize latency because the quality of the user experience depends directly on the amount of input lag. This requires efficient data handling, message queuing, and quick feedback loops. Most Twitch streams are latency-insensitive: it doesn’t matter if a stream is delayed by 3 seconds or 7. But in a real-time application like this one, every millisecond matters.

- Security. The design also has to be resilient to unforeseen circumstances and malicious actors. Inherently, the chat will be able to remotely control decisions on an external website. All chat inputs must be safely quarantined and processed to avoid unwanted behaviors like injection attacks.

The system is split into five components:

- Chat
  - Interface for sending chat messages to the Twitch stream
- Stream and Reader
  - Displays the stream visuals to the user
  - Processes chat messages and decides whether they are valid moves
  - Interprets the game state to decide when chat messages are valid
- Battle Script
  - Receives valid chat messages and aggregates them by frequency
  - Makes the final decision on which move to select, based on its selection strategy
- Pokemon Showdown
  - Server where the games are actually played
  - Receives the selected move over websockets
  - Returns the opponent’s move and the resulting changes to the game state
- Google Cloud Virtual Machine
  - Hosts the Twitch stream and battle scripts so they can run 24/7
  - Unnecessary if run locally

The details of implementation are expanded on in further sections.
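To make the data flow between the Reader and the Battle Script concrete, here is a minimal asyncio sketch of the two stages handing votes off through queues. It is an illustration under assumptions, not the project's code: it assumes votes arrive already parsed on an `asyncio.Queue`, and `chat_reader`, `battle_script`, and `send` are hypothetical names. The real Battle Script would apply its selection strategy instead of always taking the top vote.

```python
import asyncio
from collections import Counter


async def chat_reader(incoming, decisions, voting_seconds):
    """Stream and Reader (hypothetical): tally parsed votes for one turn, then hand off."""
    votes = Counter()
    loop = asyncio.get_running_loop()
    deadline = loop.time() + voting_seconds
    while (remaining := deadline - loop.time()) > 0:
        try:
            vote = await asyncio.wait_for(incoming.get(), timeout=remaining)
        except asyncio.TimeoutError:
            break  # voting window closed
        votes[vote] += 1
    await decisions.put(votes)


async def battle_script(decisions, send):
    """Battle Script (hypothetical): pick the most-voted move and forward it."""
    votes = await decisions.get()
    if votes:
        await send(votes.most_common(1)[0][0])
```

Keeping the stages behind queues means the reader never blocks on the Showdown connection, which matters for a project this latency-sensitive.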

The Window of Opportunity Problem

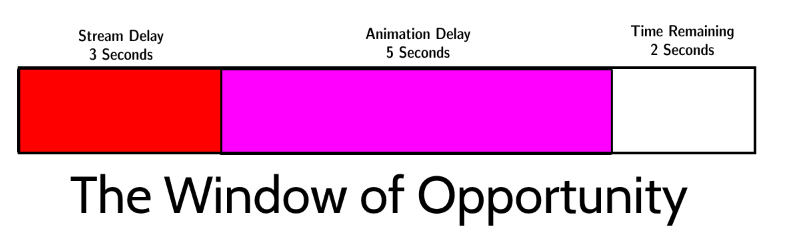

Pokemon Showdown has a battle timer of 180 seconds plus 10 seconds per move, and a battle can theoretically last an unlimited number of turns. In practice, battles last 30 turns on average, and 100-turn games are a realistic outcome. Spread over N turns, the starting 180 seconds only adds 180/N seconds per move: 6 seconds in a 30-turn game and under 2 seconds in a 100-turn game. That means you have to plan on making every move in about 10 seconds. Here’s what that looks like in the base case.

Inherently, there’s a delay between the uploader’s stream and the viewer’s stream, called stream delay. Stream delay depends on both the host’s and the viewer’s internet speed. On our end, we can (and do) invest in premium infrastructure to minimize our output latency, but we cannot control our viewers’ latency, nor will latency ever be 0. Empirically, stream delay in both the best and median cases is ~3 seconds, with some viewers having a delay of 7+ seconds.

There is also animation delay, the time taken to display the battle animations. Battle animations take 5 seconds on average, and certain turns take even longer if many animations need to be resolved that turn. Animations can be turned off, and I tried that, but the viewer experience is so miserable that disabling animations entirely is off the table.

In total, that means 8 of your 10 seconds (3 seconds of stream delay plus 5 seconds of animations) are eaten up by lag or delay, leaving users 2 seconds to make a move. Obviously, this is unviable. The core design challenge is expanding this window of opportunity beyond 2 seconds. I designed several solutions and workarounds to address this problem.

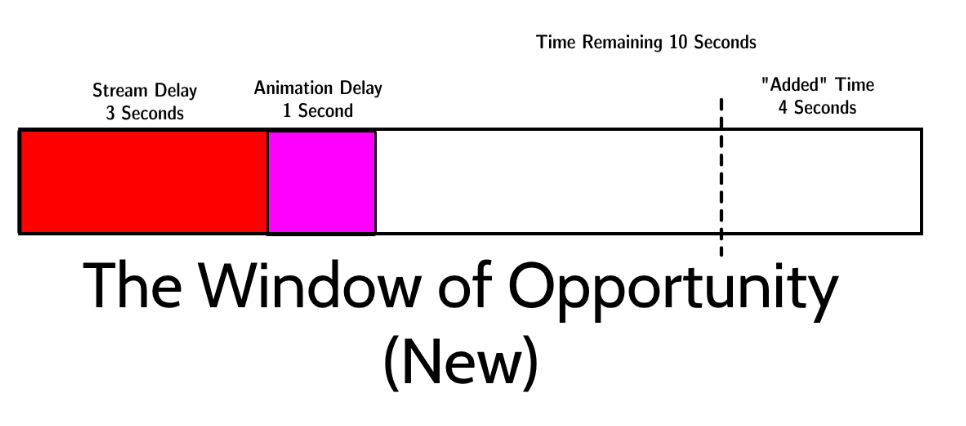

- Custom UI. Use a custom UI to minimize animation time. Pokemon Showdown is open-source, and it provides a user interface (the client) to connect to its server. Instead of using their client as-is, I created a custom client that speeds up client-side animations, reducing animation delay from 5 seconds to 1 second. This idea is expanded upon in the Custom Client and UI section.

- “Cheating” the timer. Through trial and error, I noticed that the timer rounds down the amount of time used: for example, if you use 14 seconds, it’s counted as 10 seconds! We can take advantage of this to gain 4 extra seconds per move.

- Variable move timers. As mentioned earlier, we have a bank of time at the start and an increment every move. If we consistently use more time than we gain, we eventually run out of time. But what if we take advantage of the bank? Instead of strictly taking 14 seconds per turn, we can take more time per move early on and switch to more frugal allocations as the bank depletes. My current allocation allots 25 seconds for Move 1 (the most important move for long-term planning) and then slowly decrements the time per move until it reaches 14 seconds. The idea is to maximize the number of turns that have an expanded move timer.
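The variable move timer can be simulated in a few lines to check that the schedule never drains the bank. This is a sketch under stated assumptions: it models the observed rounding as a flat 4-second grace per move (so 14 seconds is charged as 10), and it uses a hypothetical decrement of 1 second per move. Only the 180-second bank, the 10-second increment, the 25-second first move, and the 14-second floor come from the text.

```python
BANK_START = 180   # starting timer bank in seconds (from the text)
INCREMENT = 10     # seconds added back per move (from the text)
FIRST_MOVE = 25    # seconds allotted to move 1 (from the text)
FLOOR = 14         # steady-state seconds per move (from the text)
STEP = 1           # hypothetical: seconds removed from the allotment each move
GRACE = 4          # hypothetical model of the rounding: 14 s is charged as 10 s


def move_time(move_number):
    """Wall-clock seconds spent collecting votes on a move (1-indexed)."""
    return max(FLOOR, FIRST_MOVE - STEP * (move_number - 1))


def charged(seconds_used):
    """Seconds actually deducted from the bank under the GRACE assumption."""
    return max(0, seconds_used - GRACE)


def simulate(turns):
    """Play out `turns` moves of the schedule and return the remaining bank."""
    bank = BANK_START
    for n in range(1, turns + 1):
        bank -= charged(move_time(n))
        if bank < 0:
            raise RuntimeError(f"timer ran out on move {n}")
        bank += INCREMENT
    return bank


print(simulate(100))  # 114
```

Under these assumptions, moves 1 through 11 cost a combined 66 seconds of bank and every later move breaks even, so the bank settles at 114 seconds however long the game runs.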

In total, instead of having 2 seconds per move, you now have around 10 (roughly 14 seconds per move, minus ~3 seconds of stream delay and ~1 second of animations). This is not perfect, and there are still fundamental limitations.

For one, we cannot control the viewer’s internet speed; if their connection is slower than the median, they could have lag times that make the game unplayable no matter what we do. Also, even in the best-case scenario, a viewer has to make decisions in under 10 seconds, which may or may not be palatable to them. Most viewers are not intimately familiar with Showdown’s timer mechanics, so a short turn may be perceived as developer incompetence. Still, all in all, this is a significant improvement and changes the game from unplayable to manageable for a large subset of players.

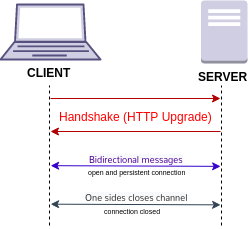

Websocket Integration for Pokemon Showdown

We can communicate with Pokemon Showdown through websockets. At its core, we set up a connection and then send command messages to Pokemon Showdown, which processes them. Like any API, we do not need to know how these commands work internally; we only need to know which commands we have access to. This project used Python with the asyncio, websockets, and requests libraries.

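```python
import websockets


class PSWebsocketClient:
    @classmethod
    async def create(cls, username, password, address):
        # Build the client and open the websocket connection to the server
        self = cls()
        self.username = username
        self.password = password
        self.address = address
        self.websocket = await websockets.connect(self.address)
        # Endpoint used later to log in
        self.login_uri = "https://play.pokemonshowdown.com/action.php"
        return self
```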

As a trivial example to illustrate how this works, saving a replay of a battle involves merely sending over the /savereplay command.

```python
# Method of PSWebsocketClient: ask the server to save a replay of a battle room
async def save_replay(self, battle_tag):
    message = ["/savereplay"]
    await self.send_message(battle_tag, message)
```

Similarly, we can create other functions for starting battles, making moves, etc., based on the Pokemon Showdown documentation of available commands.
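As a sketch of those other functions, the helpers below frame outgoing commands the way Showdown's protocol expects (`ROOMID|TEXT`, with an empty room ID for global commands such as `/search`). They are written as free functions that take the websocket, and `FakeWebsocket` stands in for a real `websockets` connection so the example runs offline. The function names are illustrative, and this sketch omits any extra fields (such as a request ID) the real client may append to battle choices.

```python
import asyncio


class FakeWebsocket:
    """Stand-in for a websockets connection that records outgoing frames."""
    def __init__(self):
        self.sent = []

    async def send(self, frame):
        self.sent.append(frame)


async def send_message(websocket, room, message_list):
    # Showdown expects ROOMID|TEXT; an empty room id targets the global room
    frame = room + "|" + "|".join(message_list)
    await websocket.send(frame)


async def search_for_match(websocket, battle_format):
    # Queue for a ladder game in the given format
    await send_message(websocket, "", ["/search " + battle_format])


async def choose_move(websocket, battle_tag, slot):
    # Pick move `slot` (1-4) in an ongoing battle room
    await send_message(websocket, battle_tag, [f"/choose move {slot}"])


async def demo():
    ws = FakeWebsocket()
    await search_for_match(ws, "gen9randombattle")
    await choose_move(ws, "battle-gen9randombattle-123", 1)
    return ws.sent


print(asyncio.run(demo()))
# ['|/search gen9randombattle', 'battle-gen9randombattle-123|/choose move 1']
```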

Slightly more interesting is how we receive messages. We set up a listener that calls .recv() in a while loop to constantly listen for messages from the Pokemon Showdown websocket, and we use these messages to update the game state.

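```python
# Method of PSWebsocketClient: wait for the next frame from the server
async def receive_message(self):
    message = await self.websocket.recv()
    return message


# Listener loop; `await` needs an async function, so the loop is wrapped
# in one (the name `listen` is illustrative)
async def listen(ps_websocket_client):
    while True:
        msg = await ps_websocket_client.receive_message()
        # Parsing the msg: update the game state here
```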
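The parsing step can start by splitting each frame into its room ID and `|type|args` lines, following Showdown's message protocol. This is a simplified sketch: `parse_server_message` and `needs_decision` are hypothetical helpers, and the check only looks at the `wait` flag in the latest `|request|` JSON to decide whether chat should be asked for a move.

```python
import json


def parse_server_message(raw):
    """Split one websocket frame into (room_id, [(msg_type, args), ...])."""
    lines = raw.split("\n")
    room = ""
    if lines and lines[0].startswith(">"):
        room, lines = lines[0][1:], lines[1:]
    events = []
    for line in lines:
        if not line.startswith("|"):
            continue  # skip plain-text log lines
        msg_type, _, rest = line[1:].partition("|")
        if not msg_type:
            continue  # blank "|" spacer lines
        # |request| carries a single JSON payload, so keep it in one piece
        args = [rest] if msg_type == "request" else rest.split("|")
        events.append((msg_type, args))
    return room, events


def needs_decision(events):
    """True if the latest non-empty |request| expects a choice (no "wait" flag)."""
    for msg_type, args in reversed(events):
        if msg_type == "request" and args[0]:
            return not json.loads(args[0]).get("wait", False)
    return False
```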

Overall, the websocket implementation is relatively straightforward. We stand on the shoulders of giants: most of the heavy lifting is already done for us, and all we need to do is use an API.

Active Monitoring Scripts and Managing Twitch Burst Data

The next technical step was creating a reader that reads the Twitch Chat and parses the messages.

Twitch OAuth

The first step is setting up a Twitch bot with permission to read (and write, which we use but isn't strictly necessary) your chosen Twitch chat. The process is relatively involved, but Twitch provides a step-by-step guide at https://dev.twitch.tv/docs/authentication/. The key idea is that the user authorizes our app through a Twitch URL containing our client ID, which yields an authorization code; we then use an HTTP request to exchange that code, together with our client ID and client secret, for access and refresh tokens.

Following their recommendations, we use an access token that expires after a few hours, plus an automatic trigger that uses the refresh token to renew our authorization when that happens.

```python
import requests

# AUTH_URL, TOKEN_URL, CLIENT_ID, CLIENT_SECRET and REDIRECT_URI are
# configuration constants defined elsewhere in the project.


def get_authorization_code():
    """
    Prompts the user to navigate to the Twitch authorization URL to grant permissions.
    """
    auth_url = f"{AUTH_URL}?response_type=code&client_id={CLIENT_ID}&redirect_uri={REDIRECT_URI}&scope=chat:read+chat:edit"
    print("Navigate to this URL to authorize:")
    print(auth_url)
    # After approval, Twitch redirects to REDIRECT_URI with the code in the URL
    code = input("Paste the authorization code from the URL here: ").strip()
    return code


def exchange_code_for_tokens(auth_code):
    """
    Exchanges the authorization code for access and refresh tokens.
    """
    payload = {
        'client_id': CLIENT_ID,
        'client_secret': CLIENT_SECRET,
        'code': auth_code,
        'grant_type': 'authorization_code',
        'redirect_uri': REDIRECT_URI
    }
    response = requests.post(TOKEN_URL, data=payload)
    response_data = response.json()
    if 'access_token' in response_data:
        print("Access token and refresh token received successfully.")
        return response_data
    else:
        print("Error:", response_data)
        return None
```
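The refresh trigger can be sketched as follows. It uses Twitch's `refresh_token` grant against the same token endpoint, with the standard library instead of `requests` so it runs without extra dependencies. `needs_refresh` and its 5-minute safety margin are hypothetical choices, and the credentials are placeholders. Twitch may hand back a new refresh token, so store whichever one the response contains.

```python
import json
import time
import urllib.parse
import urllib.request

TOKEN_URL = "https://id.twitch.tv/oauth2/token"  # Twitch's token endpoint
CLIENT_ID = "your-client-id"                     # placeholder
CLIENT_SECRET = "your-client-secret"             # placeholder


def refresh_payload(refresh_token):
    """Form fields for the refresh_token grant."""
    return {
        "grant_type": "refresh_token",
        "refresh_token": refresh_token,
        "client_id": CLIENT_ID,
        "client_secret": CLIENT_SECRET,
    }


def refresh_tokens(refresh_token):
    """POST the refresh grant and return the parsed JSON response."""
    data = urllib.parse.urlencode(refresh_payload(refresh_token)).encode()
    with urllib.request.urlopen(TOKEN_URL, data=data) as resp:
        return json.load(resp)


def needs_refresh(obtained_at, expires_in, margin=300, now=None):
    """True once we are within `margin` seconds of the access token's expiry."""
    now = time.time() if now is None else now
    return now >= obtained_at + expires_in - margin
```

Checking `needs_refresh` with the `expires_in` value from each token response, before every chat reconnect, is one simple way to wire up the automatic trigger.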

Once OAuth is set up, you have a bot that can read from and write to a chosen Twitch chat.
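With a token in hand, a bot can read chat over Twitch's IRC interface (`irc.chat.twitch.tv`, port 6697 for TLS), logging in with `PASS oauth:<token>`, `NICK`, and `JOIN #channel`. The sketch below covers only the line handling: extracting the sender and text from `PRIVMSG` lines and answering Twitch's keep-alive `PING`. It assumes no IRCv3 tags were requested (tagged lines start with `@` and would need an extra step), and the helper names are hypothetical.

```python
import re

# Matches a chat line such as ":alice!alice@alice.tmi.twitch.tv PRIVMSG #chan :m1"
PRIVMSG_RE = re.compile(
    r"^:(?P<user>[^!\s]+)!\S+ PRIVMSG #(?P<channel>\S+) :(?P<text>.*)$"
)


def parse_chat_line(line):
    """Return (user, text) for a chat message, or None for other IRC traffic."""
    match = PRIVMSG_RE.match(line.rstrip("\r\n"))
    if match is None:
        return None
    return match.group("user"), match.group("text")


def pong_for(line):
    """Twitch sends 'PING :tmi.twitch.tv'; the client must answer with PONG."""
    if line.startswith("PING"):
        return "PONG" + line[4:]
    return None
```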

Parsing Chat Messages

The next important phase is parsing chat messages. There are two important facets here: user accessibility and security. The message a chatter has to send must be intuitive, and the chat reader has to be forgiving enough to accept all sorts of mistakes while still being deterministic. These messages also have to be secure! Imagine if a group of malicious actors could coerce the software into sending all sorts of heinous commands. One command that comes to mind is /whois, which leaks the IP address of the user.

I implemented security by restricting the commands that can be sent through chat. The Twitch Chat Reader receives knowledge of the game state from Pokemon Showdown and generates a list of acceptable commands for that particular game state. For example, if there are 9 possible moves in a particular state, the reader only accepts messages that correspond to one of those moves. Chat messages are either coerced into one of the eligible moves or rejected entirely.

To use a Pokemon’s first move, type any of

1, m1, M1, or movename

Similarly, to switch to a Pokemon, type any of

s2, S2, switch 2, or switch pokemonname

If you type a move name, I use Levenshtein distances to measure how similar the message is to a valid option, and if it crosses the similarity threshold, it gets accepted.

For example, if the move is Ice Beam, icEBEem would be matched to Ice Beam and accepted as a valid move.

The goal is to be as permissive as possible for what the user wants to do; they should not have to fumble around looking for the right syntax. Anything they could intuitively think of is a valid option. Empirically, the m1 and s1 syntax were most preferred by users.
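The rules above (numeric shortcuts, switch syntax, fuzzy move names, and rejecting everything else) can be sketched as a single whitelist-based parser. This is an illustrative sketch, not the project's code: `parse_vote` and the 0.7 similarity cut-off are hypothetical, similarity is computed as 1 minus the Levenshtein distance divided by the length of the longer normalized string, and the numbers in `m1`/`s2` are treated as positions in the lists of currently legal options passed in.

```python
import re

SIMILARITY_THRESHOLD = 0.7  # hypothetical cut-off for fuzzy matches


def levenshtein(a, b):
    """Edit distance using a rolling single-row dynamic program."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def normalize(text):
    """Lowercase and drop spaces/punctuation, e.g. "Ice Beam" -> "icebeam"."""
    return re.sub(r"[^a-z0-9]", "", text.lower())


def similarity(a, b):
    a, b = normalize(a), normalize(b)
    return 1 - levenshtein(a, b) / max(len(a), len(b), 1)


def parse_vote(message, moves, switches):
    """Coerce a chat message into ("move", name) or ("switch", name); None = rejected.

    `moves` and `switches` are the options legal in the current game state,
    so anything else (including commands like "/whois") never gets through.
    """
    text = message.strip().lower()
    m = re.fullmatch(r"m?(\d)", text)              # "1", "m1", "M1"
    if m and 1 <= int(m.group(1)) <= len(moves):
        return ("move", moves[int(m.group(1)) - 1])
    s = re.fullmatch(r"(?:s|switch ?)(\d)", text)  # "s2", "S2", "switch 2"
    if s and 1 <= int(s.group(1)) <= len(switches):
        return ("switch", switches[int(s.group(1)) - 1])
    kind, target, options = "move", text, moves
    if text.startswith("switch "):                 # "switch pokemonname"
        kind, target, options = "switch", text[len("switch "):], switches
    best = max(options, key=lambda o: similarity(target, o), default=None)
    if best is not None and similarity(target, best) >= SIMILARITY_THRESHOLD:
        return (kind, best)
    return None
```

With moves `["Ice Beam", "Surf"]`, the message `icEBEem` normalizes to `icebeem`, one edit away from `icebeam`, for a similarity of about 0.86, so it is accepted as Ice Beam.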

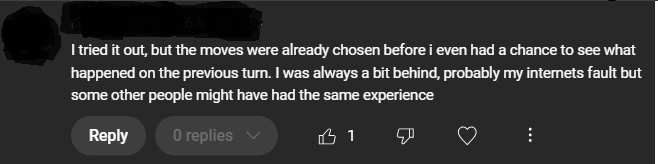

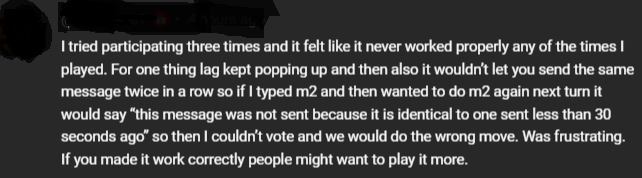

Chat Reader Issues

Twitch also implements an anti-spam filter that prevents a user from sending the same message twice within 30 seconds. By Twitch’s design, this is impossible to bypass, but having multiple ways to make the same move gives users a way to work around it. This behavior is documented in our Twitch Chat Rules, the instructions page, and the bot documentation file.

The user is always right; if something doesn't work for them, the onus is on the host to fix it. User interfaces can be made easier to understand, behaviours can be made more intuitive, error messages can be made more clear, etc.

In practice, this was a major problem. A large minority of users got stymied by the anti-spam filter and either complained, left, or both. Admittedly, having to alternate between, say, m2 and M2 is not intuitive, and expecting anyone to read an instructions page or a rules page is too large an ask. The ideal flow for a large community project is that anyone can immediately understand what to do, and the instructions page only adds context. Once important context is tucked away in a rules page, you’ve already lost. This is one of the bigger loose ends in this project. It needs either an automatic way around the anti-spam filter (without violating Twitch’s Terms of Service) or a better way to communicate how this behavior works and how to work around it.

Lag Catcher

The finishing touch is what I call the Lag Catcher. Imagine a chatter, Alice, who thinks she’s sending her move at 0:13 in Turn 1. In reality, she’s watching on a 3-second delay, so her move arrives at 0:16, in Turn 2. We don’t want moves meant for Turn 1 to affect decisions for Turn 2, so we ignore votes during the first 5 seconds of every turn. This hold overlaps with stream and animation delay and prevents lagged moves from the previous turn from affecting the next turn.
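The Lag Catcher boils down to a time check on each incoming vote. A minimal sketch, assuming timestamps in seconds on the reader's own clock and a hypothetical `VoteWindow` class; only the 5-second hold comes from the text, and the turn start time in the usage lines is made up to mirror Alice's example.

```python
from dataclasses import dataclass

LAG_HOLD_SECONDS = 5.0  # from the text: ignore the first 5 s of every turn


@dataclass
class VoteWindow:
    """Hypothetical helper: decides which votes count for the current turn."""
    turn_start: float       # when the turn began, in seconds
    duration: float         # seconds allotted to this turn (e.g. 14 to 25)
    hold: float = LAG_HOLD_SECONDS

    def accepts(self, received_at):
        """True if a vote received at `received_at` belongs to this turn."""
        elapsed = received_at - self.turn_start
        return self.hold <= elapsed < self.duration


# If Turn 2 started at 0:15, Alice's lagged Turn-1 vote at 0:16 is dropped
window = VoteWindow(turn_start=15.0, duration=14.0)
print(window.accepts(16.0))  # False
print(window.accepts(22.0))  # True
```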

State Management

The Twitch Chat Reader is also responsible for knowing when to read moves and when not to. There are 3 possible game states the Reader has to understand. Architecturally, you could justify a separate state manager, but I decided it was simple enough to keep everything in one place.

| State | Action |
|---|---|
| Game is Running, Make a Move | Parse Chat Messages for X seconds |
| Game is Running, Can’t Make Move | Do Nothing, ignore messages |
| Game is Not Running | Queue New Game |

Every valid chat message is counted, and the counts are stored in a dictionary. When the voting window closes, the dictionary is passed to the Battle Script for processing.
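The state table and the vote dictionary can be sketched together. The `GameState` names and helpers are hypothetical, and a `collections.Counter` stands in for the dictionary of vote counts.

```python
from collections import Counter
from enum import Enum, auto


class GameState(Enum):
    AWAITING_MOVE = auto()  # game is running and we must make a move
    WAITING = auto()        # game is running but we can't move yet
    NO_GAME = auto()        # no battle in progress


# What the Reader does in each state, mirroring the table
ACTIONS = {
    GameState.AWAITING_MOVE: "parse chat messages",
    GameState.WAITING: "ignore messages",
    GameState.NO_GAME: "queue new game",
}


def start_turn():
    """Fresh tally for a new voting window."""
    return Counter()


def record_vote(state, votes, vote):
    """Count a parsed vote only while a move is being collected."""
    if state is GameState.AWAITING_MOVE and vote is not None:
        votes[vote] += 1
```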

Control Logic and Move Processing

The chat reader passes in the vote counts for each move. It’s now the Battle Script’s job to decide which final move to select and send it to Pokemon Showdown.

Move Selection Strategies

- Winner Takes All. A simple majority rules vote where the most voted for move is selected.

- Probabilistic Distribution. A random move is selected with probability proportional to its vote count. More popular moves are more likely to be selected, but there’s still a high degree of randomness.

A more rigorous analysis would A/B test the two strategies on user-satisfaction metrics, but I went with Winner Takes All because it was more interesting to me. However, it could be argued that the Probabilistic Distribution is more in the spirit of Twitch Plays: Twitch Plays has always been about chaos and overcoming randomness, not avoiding it when it becomes inconvenient. Winner Takes All is more of a representation of the most common opinion, which isn’t necessarily interesting.
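Both strategies are short with `collections.Counter` and `random.choices`. This is a sketch rather than the project's code: the function names are hypothetical, and ties in Winner Takes All go to whichever option was counted first, which follows from `Counter.most_common` ordering equal counts by first insertion.

```python
import random
from collections import Counter


def winner_takes_all(votes):
    """Most-voted option; ties go to the option counted first."""
    if not votes:
        return None
    return votes.most_common(1)[0][0]


def probabilistic(votes, rng=random):
    """Sample one option with probability proportional to its vote count."""
    if not votes:
        return None
    options = list(votes)
    return rng.choices(options, weights=[votes[o] for o in options], k=1)[0]


votes = Counter({"m1": 5, "s2": 2})
print(winner_takes_all(votes))  # m1
print(probabilistic(votes))     # random: "m1" with probability 5/7, else "s2"
```

Passing a seeded `random.Random` as `rng` makes the probabilistic strategy reproducible, which helps when replaying a turn's votes for debugging.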

From Server to Showdown

The selection method is used as a parameter for a function that calculates a selected move, formats it into the form of a command, and then sends it to Showdown.

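```python
import constants  # project-local module defining SWITCH_STRING and TERASTALLIZE


def format_decision(battle, decision):
    if decision.startswith(constants.SWITCH_STRING + " "):
        # Find the reserve Pokemon being switched to; battle.user.reserve
        # and pkmn.name are assumed attribute names
        name = decision[len(constants.SWITCH_STRING) + 1:]
        pkmn = next((p for p in battle.user.reserve if p.name == name), None)
        if pkmn is None:
            raise ValueError(f"no reserve Pokemon named {name!r}")
        message = "/switch {}".format(pkmn.index)
    else:
        message = "/choose move {}".format(decision)
        # A trailing "tera" marks a Terastallized attack
        if decision.endswith("tera"):
            message = message[:-4] + constants.TERASTALLIZE
    return [message, str(battle.id)]
```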

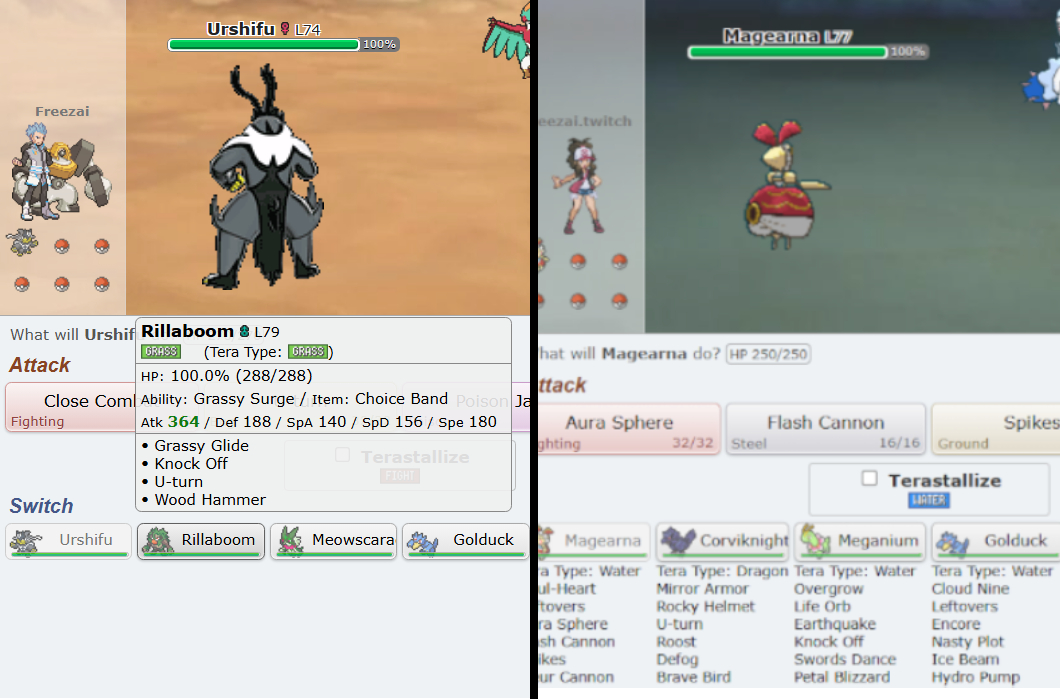

Custom UI and Client

Pokemon Showdown has a fantastic UI. It’s able to display large amounts of meaningful information effectively and intuitively. However, certain aspects of the client, namely its use of onHover, do not fit a Twitch Plays use-case. Fortunately, because Showdown is open-source, we can modify the PS-Client and UI to suit our needs.

Client Modifications

- Fast Animations. As mentioned earlier, Showdown’s animations take roughly 5 seconds, which is unacceptable. On the client side, we can create faster animations by manipulating a property called messageFadeTime, which governs how fast animations play.

```javascript
// Chat command that toggles fast animations and resets open battles
case 'blitzanims':
    if (this.checkBroadcast(cmd, text)) return false;
    var blitzanims = (toID(target) === 'on');
    Storage.prefs('blitzanims', blitzanims);
    this.add('Fast animations ' + (blitzanims ? 'ON' : 'OFF') + " for next battle.");
    for (var roomid in app.rooms) {
        var battle = app.rooms[roomid] && app.rooms[roomid].battle;
        if (!battle) continue;
        battle.resetToCurrentTurn();
    }
    return false;

// Battle code: a much shorter fade time when blitzanims is on
messageFadeTime = Dex.prefs('blitzanims') === true ? 40 : 300;
```

- onHover issues. Showdown extensively uses onHover to display key information when players hover over an element. Obviously, a program taking inputs from chat can’t hover over elements. My custom UI takes the most relevant onHover information and displays it directly on the screen. Not every piece of information fits on the screen (it’d be too cluttered), so I made an executive decision about which pieces of information matter most and left out the rest.

A comparison of the two UIs.

- Automated behaviors. This one is simple: we automatically close battles after they’re done. Ordinarily, closing battles is done manually by the user, but since we immediately move on to the next game, we need it automated. In general, a lot of human comforts are not needed and could be sacrificed for speed and performance, but this is the only one I’ve implemented so far.

24/7 Twitch Stream and Google Cloud Infrastructure

Infrastructure Requirements

The project is essentially a Twitch stream and a set of Python scripts bundled together. For short bursts, running the scripts and the stream locally is feasible, but a 24/7 Twitch stream presents a unique set of challenges. If it were just a Python script, I could put it on a cheap server and let it run, but a Twitch stream complicates things. We not only need something that can physically run a 24/7 stream at high quality; we also need unfettered internet access to accomplish this. A small note: we also need to restart the stream every 48 hours, because Twitch caps a single stream at 48 hours. This could be automated, but I chose not to implement it because I check in often enough anyway and can do it manually.

Choosing a VM and Specifications

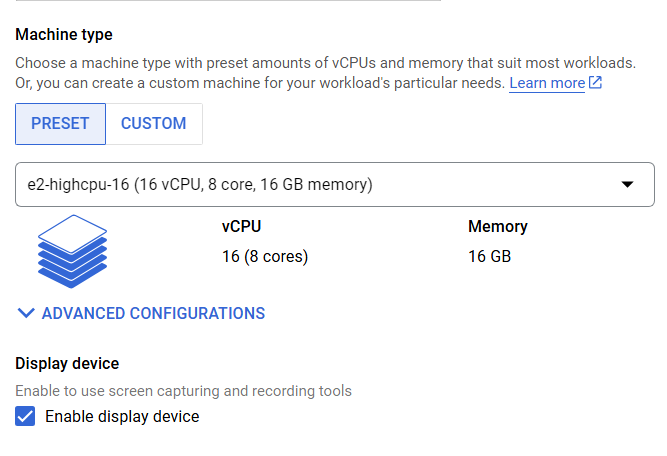

The logical solution was a virtual machine that I can access through a remote desktop connection. Streaming at 1080p requires either high computing power or support from a GPU. GPUs are much more expensive, so it made more sense to provision a powerful CPU. RAM is not much of a concern for this workload, and the Python scripts use negligible compute. I opted for an 8 vCPU, 8 GB RAM build.

Initially I tried a Linux VM, but it doesn’t come with a GUI by default (which we need for a stream), and tacking on additional packages made 1080p streaming far too laggy. Instead, I opted for a Windows VM, which has a built-in GUI and, in my experience, was much less laggy. Be warned, though: a Windows VM is significantly more expensive because you also need to pay for a Windows license.

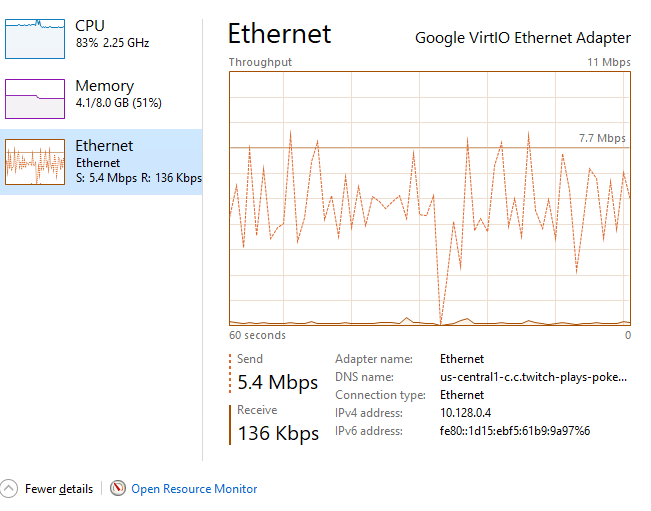

Performance on 8 vCPU + 8 GB RAM. Notice the high CPU usage.

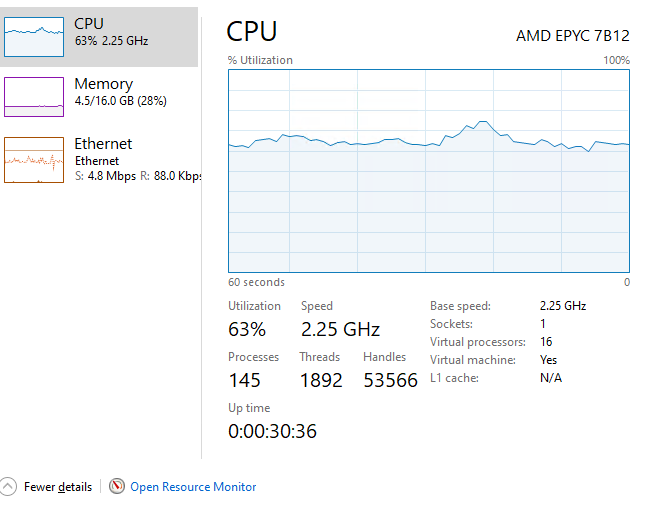

Unfortunately, 8 vCPUs and 8 GB of RAM were not enough! CPU usage hovered around 80%, but the main issue was the spikes. When CPU usage hits 100%, our video recording application momentarily lags, creating a moment of stream delay on our end. Over the course of a stream, these moments add up until the stream lags by several seconds or more. Again, this doesn’t matter for a normal stream, but it is fatal for a stream that needs to be as close to real-time as physically possible. While expensive, upgrading to a 16 vCPU setup handled the load much more comfortably, with no stream delay coming from the server’s end.

Performance on 16vCPU + 16 GB RAM.

Specifications for the VM.

Internet comes from high-speed Ethernet and incurs an extra charge for egress traffic.

An alternative approach is to buy a physical server, e.g., a laptop. Running the stream from a laptop would save monthly server costs, but it could wear the laptop out over time and get me an angry letter from my ISP. This is an option for the future, since buying hardware only breaks even if I run this for a few months.

Cost Analysis

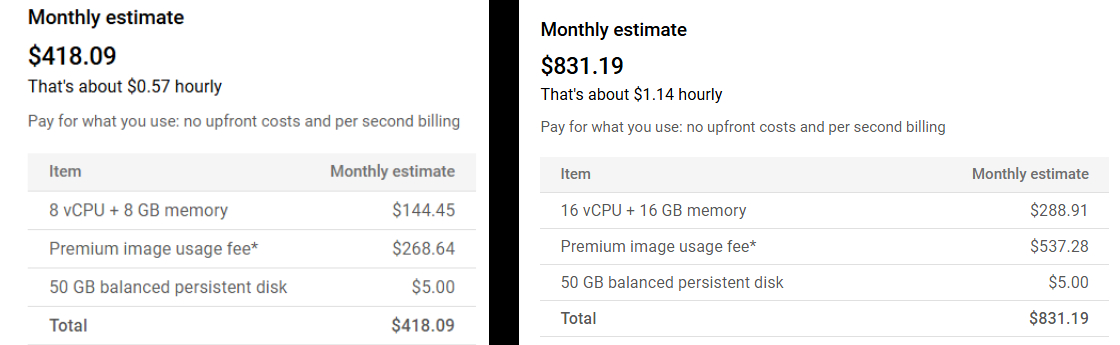

Server Costs

The daily cost of running the server splits into compute and internet costs. Compute costs scale with the number of vCPUs and the amount of RAM you require, and there is an extra fee for using a Windows VM because you have to pay for a license. In total, it costs ~$1 an hour to run the server.

As you can tell, the Windows VM cost is ridiculous.

My suspicion is that I must be overpaying, and there’s a senior developer reading this and facepalming as I burn money, but I could not find a cheaper way to provision enough resources to handle the high demands of streaming and encoding video. I also rationalized the cost because revenue would exceed costs anyway. But breaking even is not an excuse to avoid being as lean and efficient as possible; wasted money is wasted money. This is something I need to figure out immediately: either I can bring costs down, or this really is the cost of streaming real-time 1080p video. I expand on the difficulties faced here in the Biggest Mistakes section.

Fortunately, video data was much cheaper than I anticipated. Twitch streaming falls under in-network traffic, so internet costs were negligible.

Server Revenue

The Twitch stream makes money primarily from two sources: ad revenue and Twitch “Subscribers”, viewers who pay a recurring subscription to support the channel.

Ad Revenue

Ad revenue is consistent and is a function of

- Number of ads displayed (We control this)

- Number of viewers

- Ad revenue season (ad value changes with the time of year; e.g., ad rates skyrocket before Christmas)

Subscriber Revenue

Subscriber revenue varies from day to day but can be amortized over a long period of time. The number of subscribers you get per day is a function of:

- Number of viewers (more people leads to more people who may want to subscribe)

- Engagement Rate (what percentage of viewers subscribe, typically around 1-5%, more interesting streams have a higher engagement rate)

Beyond that, there are other forms of monetization, but they are significantly less meaningful. They include Turbo income (a viewer watches your stream while subscribed to Twitch Turbo), Cheer income (a viewer pays to cheer on a stream), etc.

Amortized Revenues

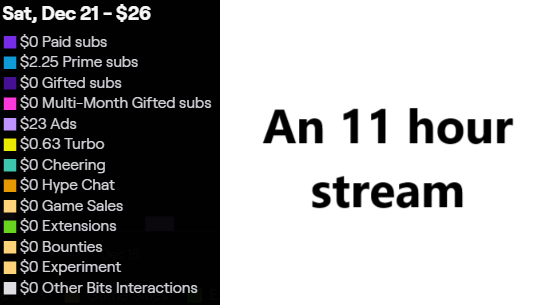

An 11-hour sample stream with 50 average viewers and $26 of revenue (about $2.36 per hour).

At 50 Average Viewers

| Revenue | Amortized Per Hour ($) |
|---|---|
| Ad Revenue | 2.09 |
| Subscriber Revenue | 0.21 |
| Miscellaneous | 0.06 |
| Total: | 2.36 |

In total, it leads to an hourly revenue of $2.36.

At 50 average viewers

| Item | Amortized Per Hour ($) |
|---|---|
| Costs | -1.14 |
| Revenue | 2.36 |
| Total: | 1.22 |

There are 720 hours in a 30-day month, leading to 1.22 × 720 = $878.40 a month, assuming viewership patterns remain constant. This is not necessarily a realistic assumption (viewership can tail off over time), but it’s a good baseline for estimating potential profit.

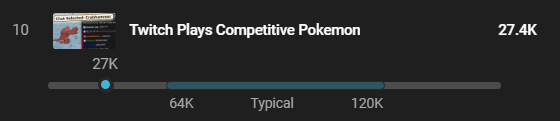

The biggest takeaway from a cost analysis point of view is that the number of viewers is the strongest factor determining profitability. 50 average viewers, all things considered, is terrible. A much larger stream that commands a large audience could run this 24/7 for far higher profits, since, empirically, revenue scales roughly in proportion to viewership. Famously, the original Twitch Plays Pokemon averaged 80,000 viewers at the peak of its virality!

At a hypothetical 500 average viewers

| Item | Amortized Per Hour ($) |
|---|---|
| Costs | -1.14 |
| Revenue | 23.60 |
| Total: | 22.46 |
| Total Per Month: | 16,171.20 |

There are 720 hours in a 30-day month, leading to 22.46 × 720 = $16,171.20 a month, assuming viewership patterns remain constant.
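These projections are a linear model, which a few lines make explicit. The sketch assumes revenue per viewer-hour stays at the measured $2.36 / 50 and that server cost stays at $1.14 per hour regardless of audience size; `monthly_profit` and `break_even_viewers` are illustrative helpers.

```python
HOURS_PER_MONTH = 720                # 30 days x 24 hours
COST_PER_HOUR = 1.14                 # server cost per hour
REVENUE_PER_VIEWER_HOUR = 2.36 / 50  # measured at 50 average viewers


def monthly_profit(avg_viewers):
    """Projected monthly profit, assuming revenue scales linearly with viewers."""
    hourly = REVENUE_PER_VIEWER_HOUR * avg_viewers - COST_PER_HOUR
    return hourly * HOURS_PER_MONTH


def break_even_viewers():
    """Average viewers needed for revenue to cover the server cost."""
    return COST_PER_HOUR / REVENUE_PER_VIEWER_HOUR


print(round(monthly_profit(50), 2))    # 878.4
print(round(monthly_profit(500), 2))   # 16171.2
print(round(break_even_viewers(), 1))  # 24.2
```

Under these assumptions, roughly 24 average viewers is enough for revenue to cover the server, and every viewer beyond that adds about $0.047 per hour of profit.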

There is no other work beyond setting up the server, and then resetting the stream every 48 hours. It really makes you appreciate the economic leverage of software: you can scale to more users at significantly less or no cost compared to traditional industries.

The Chicken and the Egg Problem

It is not enough to make something you are proud of; other people have to notice it too.

I hold two things to be true:

- Twitch Plays Showdown is fundamentally a good and interesting idea

- The more active users there are, the more interesting it is.

The key problem is known to startups as the Cold Start, or Chicken and the Egg, problem. Twitch Plays Showdown is fun when many people participate, but people will only participate if the activity is fun. You need people to make it fun, but you need fun to gather people. Coordinating a crowd of 10,000 people is far more interesting than coordinating a crowd of 10, so solving the chicken and egg problem is imperative.

Biggest Mistakes

Over the course of this project, there were a number of obstacles, setbacks, and learning experiences.

- Poor Intake Funnel. To solve the Chicken and the Egg problem, you need an effective intake funnel to attract people to the stream. My approach centered on a YouTube video on a channel I own called Freezai, which has 170,000 subscribers. I would advertise the stream there and funnel people through. But unexpectedly, nobody cared. At all.

The advertisement video severely underperformed.

My video about the stream was literally the worst-performing video I’ve made this year. Twitch Plays has a storied history of cultural relevance, but I clearly misunderstood what people cared about and were interested in.

Isolating the issue is tricky: is the idea itself bad, am I not the right person for the job, or is it something else entirely? Maybe my finger isn’t on the pulse as much as I thought it was.

- Latency management. Despite my best efforts, I was only able to expand the decision window to about 10 seconds in the best and median cases. That still means the viewers with slower-than-median connections, roughly half of them, are very likely having a poor time interacting with an environment that demands quick decisions. I also overestimated how comfortable people would be with 10 seconds to begin with. Just because I am comfortable with the game doesn’t mean most people can make decisions that fast, and even those who can aren’t necessarily having a good time. This led to people who were interested in the concept trying it and then giving up because they couldn’t keep up. This is a recipe for disaster: a poor intake funnel, followed by poor retention of the people who did make it through. Structurally, I’m not sure how to solve this. The turn timer comes for us all, and any attempt to use more of the bank increases the likelihood that we run out of time and lose the game instead. Perhaps that is a risk worth taking, though. Or maybe there are even more creative solutions, like clawing back time by making quick moves on obvious turns.

- Penny wise, pound foolish, especially with respect to infrastructure. It is very likely that I am overpaying for infrastructure. For all the effort I put into researching specifications and service providers and collecting data, my biggest cost is a Windows license. I refuse to believe that I could not do everything I’m doing on Linux, but I simply could not figure out how to solve GUI lag issues within a cloud VM. Furthermore, using Google Cloud is like using a chainsaw to cut a piece of paper. Google’s infrastructure is great for businesses that need it to power high-stakes, large-scale operations. But do I need it when all I have is a Twitch stream and some Python scripts? Maybe I’d be better off with a different server provider that costs less but doesn’t have all the frills I would never use. I’m paying for convenience, but I’m also paying for things I don’t need.

Future Work

While I believe in the concept, the initial failure of the project to drum up large amounts of viral interest is a concern. My plan is to pivot into using this software for more event-based activities, rather than a 24/7 live stream climbing a global leaderboard. For example, there could be events like “Can Twitch Beat [Top Player Name]?” Events solve two of the main issues faced so far:

- Events can concentrate users into specific time slots, instead of spreading them out over the day. This helps solve the Chicken and the Egg problem.

- Events can solve the latency problem by turning off the turn timer. The turn timer is used in games on the global ladder, but friendly matches don’t need it. A friendly match with a top player can have the stringent turn timer turned off, expanding the window of opportunity to 20 or 30 seconds so that more people can enjoy Twitch Plays Showdown to its fullest.

Also, I am going to extend the functionality of the application to play all types of battles, instead of only the ones pre-programmed in. Different battle formats may have different options available, and instead of hardcoding these differences in, I am going to revamp the state controller to be able to detect and automatically handle different formats.

I am also going to package this software into an executable file so that anyone can use it without prior technical knowledge. Right now, running my software requires knowing how to use the terminal and run Python scripts, which is beyond most non-technical users.

Overall, I believe this is a good first step and I am excited to continue further as I improve on the first release of this application.